Reznick (2007) (see previous post) simplified Robinson simplification of Hilbert construction of positive polynomials that are not sums of squares by his perturbation lemma. Blekherman shows that the mystery is in the dimension of monomial basis. For example, there are  points in n-dimensional hypercube, but since there are only

points in n-dimensional hypercube, but since there are only  quadratic monomials all this points when evaluated cannot have rank more then the number of monomials. As a consequence, it is sufficient to nullify only about quaratic number of points in a quadratic form (by applying a set of linear constraints on the coefficients arising from evaluations of values of monomials) to nullify all points in a hypercube for these quadratic forms.

quadratic monomials all this points when evaluated cannot have rank more then the number of monomials. As a consequence, it is sufficient to nullify only about quaratic number of points in a quadratic form (by applying a set of linear constraints on the coefficients arising from evaluations of values of monomials) to nullify all points in a hypercube for these quadratic forms.

There are much more monomials in quartic polynomials (much larger basis), and therefore much larger freedom in choosing coefficients such that quartic will be nullified at quadratic number of points in hypercube, but will be nonzero on other points of a hypercube! That lead that such quartic polynomial even if it will be positive will not be a sum of squares.

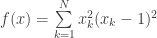

Reznick perturbation lemma open a way to automatically (algorithmically) generate positive polynomials that a re not sums of squares, which in the current version may not lead to sufficient expansion of positive polynomial cone to solve all the problems from a given class. Reznick (2007) perturbation lemma, simplified for our current purpose, in high school terms, starts from a sums of squares of terms defining hypercube, say  is

is  . Moreover, at the minimum it round, i.e. Hessian is strictly positive definite (easy to check by direct calculations, intuitively each term in the sum around the minimum is a positive definite quadratic multiplied by approximately 1). Now we are going to perturb this quartic by another quartic (

. Moreover, at the minimum it round, i.e. Hessian is strictly positive definite (easy to check by direct calculations, intuitively each term in the sum around the minimum is a positive definite quadratic multiplied by approximately 1). Now we are going to perturb this quartic by another quartic ( ), splitting points in the hypercube into two sets. In one set we require that Taylor expansion of

), splitting points in the hypercube into two sets. In one set we require that Taylor expansion of  vanish to second order term, and on the other set we require that

vanish to second order term, and on the other set we require that  is strictly positive. Only those points in the hypercube are dangerous, since perturbation

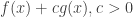

is strictly positive. Only those points in the hypercube are dangerous, since perturbation  may lead to negative values around minima for any positive

may lead to negative values around minima for any positive  . In the rest of the space we can choose small enough

. In the rest of the space we can choose small enough  to ensure positiveness of resulting polynomial (I skip this part, but one need to have worst estimate for the values of

to ensure positiveness of resulting polynomial (I skip this part, but one need to have worst estimate for the values of  for a given norm of

for a given norm of  and take a region bounded by some norm and outer region where only ratios between leading coefficients matter). For the first set of points

and take a region bounded by some norm and outer region where only ratios between leading coefficients matter). For the first set of points  has Hessians with the finite eigenvalues. If we need to ensure that c times minimum eigenvalue is smaller that Hessian eigenvalues (all the same) for

has Hessians with the finite eigenvalues. If we need to ensure that c times minimum eigenvalue is smaller that Hessian eigenvalues (all the same) for  in the minima. That ensures 0 value on the set that are local minima. For the second set of points the value is positive in some neighborhood by construction.

in the minima. That ensures 0 value on the set that are local minima. For the second set of points the value is positive in some neighborhood by construction.

Now automatic construction: given monomial basis we need to ensure about quadratic number of points to have 0 values and gradients being 0. That is about  conditions for about

conditions for about  monomials. The rest of points in the hypercube should be strictly greater than zero. For that we need to ensure that the values at the basis points are positive and that all point in the hypercube are represented by the linear combinations with positive coefficients. For that we need to peak as the basis points the points with minimum number of ‘1’ as coordinates. and the second condition is the inequality condition. leading to linear program. It has solution because we have coefficients in front of

monomials. The rest of points in the hypercube should be strictly greater than zero. For that we need to ensure that the values at the basis points are positive and that all point in the hypercube are represented by the linear combinations with positive coefficients. For that we need to peak as the basis points the points with minimum number of ‘1’ as coordinates. and the second condition is the inequality condition. leading to linear program. It has solution because we have coefficients in front of  that can grow unbound, and being compensated by other terms. and inequality condition is much weaker then equality condition. Then one can compute the estimate for the constant c, which depends only on the calculations in the basis points. The exact machinery still need to be worked out, since one need also estimate the values of projections of all point of hypercube onto basis points.

that can grow unbound, and being compensated by other terms. and inequality condition is much weaker then equality condition. Then one can compute the estimate for the constant c, which depends only on the calculations in the basis points. The exact machinery still need to be worked out, since one need also estimate the values of projections of all point of hypercube onto basis points.

That may be not sufficient to produce wide enough expansion of SOS cone to solve all instances of, say, partition problem, but we may need small enough extension to pertube problem quartic to be represented by the sums of squares.

Sincerely yours,

C.T.

PS

psd that is not SOS:

and the (not cleaned) code in Matlab with symbolic toolbox to get it

%% mupad test for positive polynomials Hilber-Reznik

n=3;

y= evalin( symengine, sprintf( 'combinat::compositions(4, Length= %d, MinPart=0)', n+1) );

pow= arrayfun( @(k) double(y(k)), 1:numel(y), 'UniformOutput', false );

pow= cat(1, pow{:});

xs= sym( 'x', [n,1]);

xx= zeros( 2^n, n );

v=0:2^n-1;

for k=1:n,

xx( bitand(v, 2^(k-1) )~=0 , k )=1;

end

%xx= 2*xx-1;

xx(:, n+1)=1;

%xx( end+1, :)= [1 2 2 1];

y= evalin( symengine, sprintf( 'combinat::compositions(2, Length= %d, MinPart=0)', n+1) );

pow2= arrayfun( @(k) double(y(k)), 1:numel(y), 'UniformOutput', false );

pow2= cat(1, pow2{:});

yy2= nan( size( pow2,1), size(xx,2) );

yy= nan( size( pow,1), size(xx,2) );

for k=1:size(xx,1),

yy(:, k)= prod( bsxfun( @power, xx(k,:), pow ), 2);

yy2(:, k)= prod( bsxfun( @power, xx(k,:), pow2 ), 2);

end

for k=size( pow, 1 ):-1:1,

xsm( k )= sym(1);

for k2= n:-1:1,

xsm( k )= xsm( k )*xs(k2)^pow( k, k2 );

end

end

for k= n:-1:1,

dxsm(k,:)= diff( xsm, xs(k) );

end

r= rank(yy2);

x0Idx= 1:7;

x1Idx= 8;

%we need also condition for gradient here

dpow= zeros(size(pow));

dpow(:,end)=1;

for k=1: size( pow, 2)-1,

idx= pow(:,k)>0;

dpow( idx, end )= dpow(idx, end).*pow(idx,k);

dpow(idx,k)= pow(idx,k)-1;

end

% %

% equality constraints

lc= zeros(size(pow, 1), 0);

for k=1:7,

lc(:, end+1)= subs( xsm, xs, xx(k, 1:3) )';

lc(:, end+(1:n))= subs( dxsm, xs, xx(k, 1:3) )';

end

% lc(:, end+(1:n))= subs( dxsm, xs, xx(8, 1:3) )';

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%

% The following line is a place to play with different polynomials

% we have rather strange way to do it here by imposing eight point

% where quartic polynomial vanishes first order term on Taylor expansion,

% and the value at this point is positive (it is sufficient to have 0 here,

% but the code would be more complicated).

% removing second line below (lc .. ) and switching next lc lines

% (commenting/ uncomenting) lead to Robinson polynomial

%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

x2= [3 -3 3]; % [3 -1 3] also works well

lc(:, end+(1:n))= subs( dxsm, xs, x2 )'; % comment for Robinson

bc= zeros( size( lc, 2), 1 );

% lc(:, end+1)= subs( xsm, xs, xx(8, 1:3) )'; % uncomment for Robinson

lc(:, end+1)= subs( xsm, xs, x2 )'; % comment for Robinson

bc(end+1)=1;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

cf= mldivide( lc', bc)*54*2 % multiplier 1 for Robinson

disp( [size(lc) rank(lc)])

% %

% coefficient c in perturbation lemma was found by hand, and in now way is optimal

p2= 1/1000*sum( round(cf).*xsm.') + sum( xs.^2.*(xs-1).^2) % first factor is 1 for Robinson

dp2= [diff(p2, xs(1)) diff(p2, xs(2)) diff(p2, xs(3))]

subs( p2, xs, xx(8, 1:3))

sol= solve(dp2)

xv= ([sol.x1 sol.x2 sol.x3]);

vf= arrayfun( @(k) double(subs( p2, xs, xv(k, :))), 1:size(xv,1))';

[min(vf) min(real(vf)) min(imag(vf))]

, where we know

and want to find prime factors

and

.

, than we can write

.

, since multiplication group modulo prime is cyclic for each

there is unique

satisfying the equation. In other words we need to test

values to find correct one, if we can. From now on I assume

satisfy

and therefore

divides

.

, so we can write

since

. In other words

. Substituting it into original equation we get

. Summarizing,

.

.

where

is a univariate polynomial on

of degree

Substituting this into original equation we will get modular univariate polynomial equation of degree

. We need to factorize it and check

values of resulted

.